Orchestrating the Future: A Human-Centric Approach to Multi-Agent Robotics

This article explores a human-centric vision for multi-agent robotics, grounded in the Physical AI paradigm and the CEDR framework developed at CogniEdge.ai. It outlines how technologies like collaborative sensing, edge AI, digital twins, swarm intelligence, and explainable interfaces can be orchestrated to create intelligent, empathetic robotic ecosystems. With real-world examples and forward-looking insights, it highlights how robots can evolve into adaptive teammates, enhancing safety, productivity, and human well-being across industries.

Madhu Gaganam

10/7/2025 · 5 min read

Imagine an electric vehicle battery assembly line where a technician, showing subtle signs of fatigue detected by a wearable sensor, is seamlessly supported by a collaborative robot. The robot slows its pace, offers a verbal prompt to rest, and intelligently reallocates its immediate tasks to a nearby automated system to maintain production flow. This is not science fiction; it is the near future of manufacturing, powered by a new paradigm in Human-Robot Interaction (HRI) that treats robots not as tools, but as partners in a cohesive, intelligent ecosystem. Companies like Tesla are already experimenting with such systems, using collaborative robots to enhance worker safety and efficiency on their Gigafactory lines.

As Physical AI — the fusion of advanced AI with physical systems — moves from research labs to factory floors, the central challenge has shifted from programming a single robot to orchestrating swarms of them. Managing fleets of humanoids, cobots, and drones requires a holistic framework that prioritizes low latency, scalable coordination, and, most importantly, a deep, intuitive connection with human counterparts. This article explores the core technological pillars, prevailing challenges, and transformative potential of a human-centric, edge-driven approach to orchestrating our robotic future.

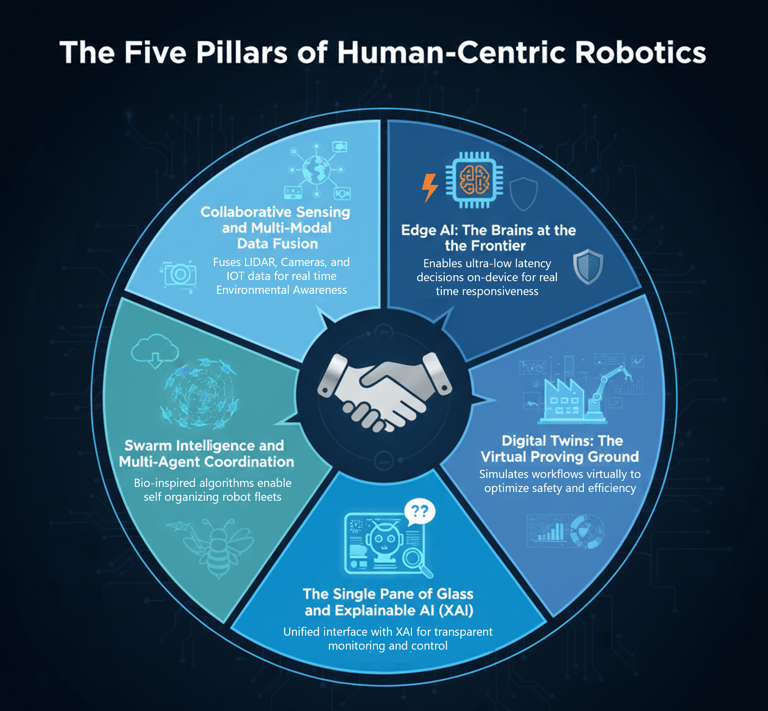

The Core Pillars of Modern Robotic Ecosystems

Building a truly collaborative robotic workforce rests on several interconnected technologies that together create a resilient, adaptive, and intelligent system. These pillars form the foundation for next-generation HRI.

Collaborative Sensing and Multi-Modal Data Fusion

Modern robots perceive the world through a rich tapestry of sensors, including LiDAR, high-definition cameras, and IoT devices. Collaborative sensing takes this a step further, allowing a group of robots to fuse their individual sensor data into a single, unified real-time model of their environment. This multi-modal data fusion creates a comprehensive situational awareness that is far more robust and detailed than any single agent could achieve. For instance, one robot’s camera feed can be combined with another’s LiDAR data to accurately map an obstacle that is partially obscured from both viewpoints, dramatically improving navigation and safety in dynamic environments. Boston Dynamics’ Spot robots, for example, use shared sensor data to navigate construction sites, ensuring precise coordination in cluttered spaces.
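As a rough illustration of the camera-plus-LiDAR case described above, the following sketch fuses two small occupancy grids by adding log-odds. It assumes the grids are already aligned to the same frame, that the two sensors are independent, and that 0.5 means "unknown"; the grid values and function names are hypothetical, not taken from any particular robot stack.

```python
import math

def logit(p: float) -> float:
    """Convert an occupancy probability (strictly between 0 and 1) to log-odds."""
    return math.log(p / (1.0 - p))

def sigmoid(l: float) -> float:
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-l))

def fuse_grids(grid_a, grid_b):
    """Fuse two aligned occupancy grids cell by cell by summing log-odds.

    Assumes independent sensors and a uniform 0.5 prior, so an 'unknown'
    cell (0.5) contributes nothing and the other sensor's value is kept.
    """
    return [
        [sigmoid(logit(pa) + logit(pb)) for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(grid_a, grid_b)
    ]

# Hypothetical one-row grids: the camera sees the left half of an obstacle,
# the LiDAR sees the right half; the other cells are unknown (0.5).
camera_grid = [[0.9, 0.9, 0.5, 0.5]]
lidar_grid = [[0.5, 0.5, 0.9, 0.9]]

fused = fuse_grids(camera_grid, lidar_grid)
print([round(p, 2) for p in fused[0]])
```

Neither robot sees the whole obstacle on its own, but in the fused row every cell is marked as likely occupied. Where both sensors agree, the summed log-odds would push the probability higher than either reading alone.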

Edge AI: The Brains at the Frontier

While the cloud is invaluable for training complex AI models and long-term analytics, real-time interaction demands computation at the source. Edge AI places processing power directly on the robots or nearby edge servers, enabling decisions with ultra-low latency (often under 10 milliseconds in optimized setups). This is critical for tasks requiring immediate feedback, such as a cobot adjusting its grip on a delicate component or a swarm of drones coordinating their flight paths to avoid collisions. An edge-driven architecture also enhances security by keeping sensitive operational data on-premises and ensures operational continuity even if cloud connectivity is lost. Companies like Amazon leverage edge AI in their warehouse robots to process real-time navigation data, boosting efficiency in dynamic fulfillment centers.
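The edge-first reasoning above can be sketched as a small routing policy. This is only an illustrative assumption about how such a decision might be structured: the function name, the latency figures, and the "safe_stop" fallback are hypothetical and not drawn from any vendor's system.

```python
from typing import Optional

def choose_compute_target(
    deadline_ms: float,
    edge_latency_ms: float,
    cloud_rtt_ms: Optional[float],
) -> str:
    """Pick where to run a decision given its latency deadline.

    cloud_rtt_ms is None when cloud connectivity is lost.
    Returns "edge", "cloud", or "safe_stop".
    """
    # Prefer on-robot or nearby edge compute whenever it meets the deadline.
    if edge_latency_ms <= deadline_ms:
        return "edge"
    # Use the cloud only if it is reachable and fast enough for this task.
    if cloud_rtt_ms is not None and cloud_rtt_ms <= deadline_ms:
        return "cloud"
    # Nothing can answer in time: fall back to a conservative behavior.
    return "safe_stop"

# A grip adjustment with a 10 ms budget stays on the edge.
print(choose_compute_target(deadline_ms=10, edge_latency_ms=4, cloud_rtt_ms=80))
# A slow analytics query with a 500 ms budget can go to the cloud.
print(choose_compute_target(deadline_ms=500, edge_latency_ms=900, cloud_rtt_ms=120))
# Same query with the cloud link down.
print(choose_compute_target(deadline_ms=500, edge_latency_ms=900, cloud_rtt_ms=None))
```

The point of the sketch is that time-critical decisions never depend on the cloud link, which is what allows operation to continue when connectivity drops.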

Digital Twins: The Virtual Proving Ground

Before a single robot is deployed, its entire workflow can be simulated and optimized in a virtual replica of the physical environment. These digital twins, often powered by platforms like NVIDIA Omniverse or Siemens’ MindSphere, provide a risk-free sandbox for testing everything from task efficiency to emergency safety protocols. By simulating complex human-robot interactions, manufacturers can validate designs, predict potential bottlenecks, and train AI models on millions of scenarios, ensuring that physical deployments are safe, efficient, and predictable from day one. For example, Siemens uses digital twins to optimize robotic assembly lines in automotive plants, reducing deployment times by up to 30%.
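To make "predicting potential bottlenecks" concrete, here is a deliberately tiny Monte Carlo sketch of a two-station workflow. It is a toy stand-in for a digital twin, not a model of any commercial platform; the station names, timing figures, and Gaussian timing assumption are all hypothetical.

```python
import random

def simulate_line(stations: dict, runs: int = 1000, seed: int = 0) -> dict:
    """Return the mean simulated cycle time per station, in seconds.

    stations maps a station name to (mean_seconds, jitter_seconds).
    Durations are drawn from a Gaussian and clipped at zero.
    """
    rng = random.Random(seed)  # seeded so repeated runs are comparable
    totals = {name: 0.0 for name in stations}
    for _ in range(runs):
        for name, (mean_s, jitter_s) in stations.items():
            totals[name] += max(0.0, rng.gauss(mean_s, jitter_s))
    return {name: total / runs for name, total in totals.items()}

# Hypothetical workflow: a robot pick step followed by a human inspection step.
stations = {
    "robot_pick": (10.0, 1.0),
    "human_inspect": (14.0, 3.0),
}

means = simulate_line(stations)
bottleneck = max(means, key=means.get)
print(bottleneck, {name: round(t, 1) for name, t in means.items()})
```

A real digital twin would model geometry, physics, and human motion, but the workflow is the same in spirit: run many virtual trials, then look for the step that limits throughput before anything is deployed.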

Swarm Intelligence and Multi-Agent Coordination

Coordinating a fleet of robots requires a sophisticated planning system. Inspired by natural systems like insect colonies and bird flocks, swarm intelligence planning utilizes decentralized, bio-inspired algorithms to enable robots to work together on complex tasks. This approach is highly scalable, allowing new robots to be added to the swarm with minimal reconfiguration. More advanced frameworks are now integrating neuroscience principles like the Free Energy Principle (FEP), under which a system continually acts and updates its internal model to minimize “surprise”, the gap between the states it expects or prefers and what it actually senses. The result is a shift from reactive control to predictive, self-organizing autonomy. DHL, for instance, employs swarm intelligence in its logistics drones to optimize delivery routes dynamically, adapting to real-time traffic and weather changes.
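One simple way to picture decentralized coordination is a sequential auction in which each free robot bids its distance to a task and the lowest bid wins. Note that this is a market-based allocation scheme swapped in for illustration, not a bio-inspired algorithm and not a description of any company's system; the robot names, positions, and tasks are hypothetical.

```python
import math

def auction(robots: dict, tasks: dict) -> dict:
    """Sequential single-item auction. Returns {task_name: robot_name}.

    robots and tasks map names to (x, y) positions in metres.
    Each task goes to the closest robot that is still free.
    """
    free = dict(robots)
    assignment = {}
    for task, task_pos in tasks.items():
        if not free:
            break  # more tasks than robots: remaining tasks stay unassigned
        # Every free robot computes its own bid from local information only.
        bids = {name: math.dist(pos, task_pos) for name, pos in free.items()}
        winner = min(bids, key=bids.get)
        assignment[task] = winner
        del free[winner]
    return assignment

robots = {"r1": (0.0, 0.0), "r2": (10.0, 0.0), "r3": (5.0, 5.0)}
tasks = {"weld": (9.0, 1.0), "inspect": (1.0, 1.0)}

print(auction(robots, tasks))
```

Adding a robot means adding one entry to `robots`; the allocation logic itself does not change, which is the scalability property described above. A greedy auction like this is not guaranteed to find the globally best assignment.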

The Single Pane of Glass and Explainable AI (XAI)

To make these complex systems manageable, human supervisors need a unified interface — a single pane of glass — for monitoring, controlling, and orchestrating the entire robotic fleet. This central dashboard provides real-time insights into system health, productivity, and individual robot performance. Crucially, this interface must be powered by Explainable AI (XAI), which makes the robots’ decision-making processes transparent. When a robot deviates from a task, XAI can provide a clear reason, building trust and enabling operators to diagnose and resolve issues effectively. General Electric’s Predix platform, for example, uses XAI-driven dashboards to monitor industrial robots, helping operators understand and optimize complex workflows.
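As a minimal sketch of what "a clear reason" might look like on a dashboard, the snippet below turns the limits that a robot exceeded into a one-line explanation. It assumes a simple rule-based controller where decisions are triggered by thresholds; explaining a learned model is a much harder problem. All class names, signals, and limits are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Trigger:
    signal: str   # name of the monitored signal
    value: float  # observed value
    limit: float  # configured upper limit

@dataclass
class Decision:
    robot_id: str
    action: str
    triggers: List[Trigger]

def explain(decision: Decision) -> str:
    """Build a human-readable reason from the limits that were exceeded."""
    exceeded = [t for t in decision.triggers if t.value > t.limit]
    if not exceeded:
        return f"{decision.robot_id} chose '{decision.action}' with no limit exceeded"
    reasons = "; ".join(
        f"{t.signal}={t.value} exceeded limit {t.limit}" for t in exceeded
    )
    return f"{decision.robot_id} chose '{decision.action}' because {reasons}"

decision = Decision(
    robot_id="cobot-7",
    action="pause_task",
    triggers=[
        Trigger("gripper_force_n", 14.2, 12.0),
        Trigger("motor_temp_c", 61.0, 80.0),
    ],
)
message = explain(decision)
print(message)
# cobot-7 chose 'pause_task' because gripper_force_n=14.2 exceeded limit 12.0
```

Only the signal that actually drove the decision appears in the message, which keeps the explanation short enough for an operator to act on.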

The Human Element: Towards Symbiotic Collaboration

The ultimate goal of this technological convergence is to create a truly symbiotic relationship between humans and machines. This requires moving beyond simple collaboration to a state of proactive, intuitive partnership. Advanced HRI systems now leverage Large Language Models (LLMs), typically paired with speech and vision models, to understand natural language commands, interpret human intent from tone and gesture, and respond with empathetic, context-aware actions.

The concept of neuroadaptive manufacturing represents the pinnacle of this vision. By integrating biometric sensors (like EEG headsets) into the collaborative sensing network, robots can perceive a human’s cognitive state. A robot can detect if its human partner is fatigued, distracted, or overloaded and dynamically adapt its behavior to assist. This could involve slowing down, taking over a complex part of a task, or providing helpful guidance. This level of empathy transforms the robot from a mere tool into a perceptive and supportive teammate, enhancing both worker well-being and overall productivity. Pilot programs at companies like BMW are exploring neuroadaptive interfaces to improve worker-robot synergy in assembly tasks.
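The adaptive behavior described above could be sketched as a mapping from an estimated fatigue score to a robot speed and a suggested action. The score range, the thresholds of 0.4 and 0.7, the speed factors, and the action names are all hypothetical placeholders; a real system would need validated biometric models and safety review.

```python
from typing import Tuple

def adapt_to_fatigue(fatigue: float, base_speed: float) -> Tuple[float, str]:
    """Map a fatigue estimate in [0, 1] to (robot_speed, action).

    The thresholds and scaling factors are illustrative assumptions only.
    """
    if not 0.0 <= fatigue <= 1.0:
        raise ValueError("fatigue must be between 0 and 1")
    if fatigue < 0.4:
        return base_speed, "continue"
    if fatigue < 0.7:
        # Moderate fatigue: slow the shared task to ease the operator's pace.
        return base_speed * 0.6, "slow_down"
    # High fatigue: slow further, prompt a break, and hand work to other systems.
    return base_speed * 0.3, "suggest_break_and_reassign"

for score in (0.2, 0.5, 0.9):
    print(score, adapt_to_fatigue(score, base_speed=1.0))
```

Keeping the policy this explicit also makes it easy to show an operator why the robot changed its pace.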

Navigating the Challenges on the Horizon

Despite immense progress, several significant challenges must be addressed to realize the full potential of human-centric robotics.

Data Integration and Standardization: Fusing data from heterogeneous sensors and systems remains a complex technical hurdle. Establishing standardized protocols for data sharing is essential for seamless interoperability.

Safety, Security, and Trust: As robots become more autonomous, ensuring they are safe and secure from cyber threats is paramount. Building public and worker trust requires transparent decision-making (via XAI), robust safety certifications, and clear accountability frameworks.

Scalability and Adaptability: While current systems are becoming more scalable, enabling robots to adapt to new tasks without extensive reprogramming remains a key challenge. Frameworks that allow for task definition through natural language or demonstration are crucial for flexible automation.

Ethical Considerations: The use of cognitive monitoring raises important ethical questions about privacy and data security. Beyond these, concerns about job displacement and potential AI biases in decision-making could exacerbate workplace inequalities if not addressed. For example, if robots prioritize tasks based on flawed training data, they might inadvertently favor certain workflows or workers, undermining fairness. Implementing these systems responsibly requires clear policies, on-device data encryption, informed consent for biometric monitoring, and a steadfast commitment to prioritizing human well-being.

The Future Vision: An Integrated, Adaptive World

The future of robotics is not in building individual, siloed machines, but in architecting intelligent, interconnected ecosystems that work in concert with humanity. By integrating collaborative sensing, edge AI, digital twins, and swarm intelligence within a human-centric framework, we are paving the way for systems that are not only highly efficient but also safe, resilient, and adaptive.

This new generation of Physical AI promises to revolutionize industries from manufacturing and logistics to healthcare and disaster response. The journey ahead involves solving complex technical and ethical challenges, but the destination is a world where technology amplifies human potential, creating a true partnership between humans and machines to build a more productive and sustainable future. What role will we play in this robotic renaissance?