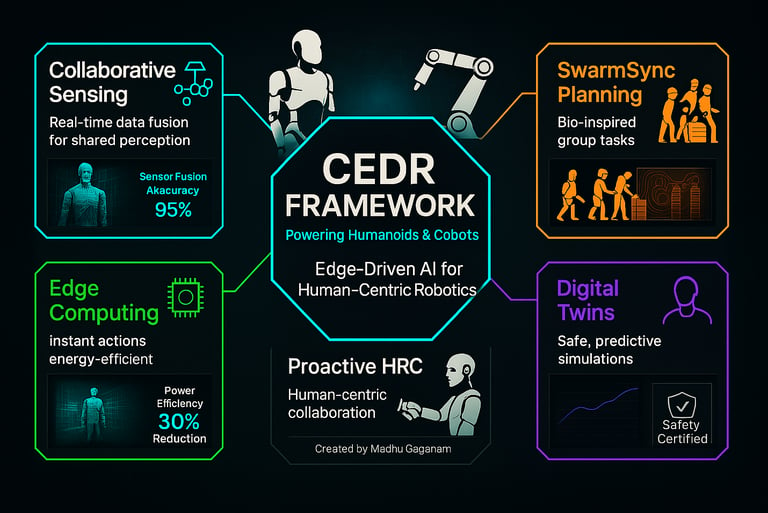

CEDR: Powering Humanoids with Edge AI and Bio-Inspired Intelligence

Introduction to CEDR, a groundbreaking framework redefining Physical AI for humanoids! With smart sensing, edge computing, digital twins, & SwarmSync, it drives innovation in robotics.

Madhu Gaganam

9/9/2025 · 9 min read

Physical AI, the fusion of artificial intelligence with physical systems, is redefining how humanoids and collaborative robots (cobots) engage with the world. By integrating Visual Language Models (VLMs), Visual Language Action Models (VLAs), advanced robotics, sophisticated task planning, and collaborative sensing, we can unlock transformative potential for industries like manufacturing, healthcare, logistics, and, with future advancements, disaster response. This article presents the Cohesive Edge-Driven Robotics (CEDR) framework, a visionary approach to Physical AI that prioritizes human-robot collaboration tailored for humanoids and cobots, with potential for broader systems like drones. CEDR harnesses collaborative sensing, edge computing, digital twins, and SwarmSync Planning to deliver scalable, energy-efficient, and human-centric automation. Designed to inspire Physical AI enthusiasts and implementers alike, CEDR empowers humanoids and cobots with cutting-edge AI and supports global efforts to advance industry, innovation, and infrastructure.

The Transformative Value of Physical AI

Physical AI empowers robots and humanoids to perceive, reason, and act in dynamic environments with human-like adaptability. By integrating VLMs for scene understanding, VLAs for action planning, and collaborative sensing for shared situational awareness, CEDR delivers:

Superior Decision-Making: Collaborative sensing aggregates data from diverse sensors (e.g., cameras, Light Detection and Ranging (LiDAR), Internet of Things (IoT) devices) to create a comprehensive environmental understanding, enabling precise actions. The AI robotics market is projected to grow from USD 12.77 billion in 2023 to USD 124.77 billion by 2030 (Compound Annual Growth Rate (CAGR) 38.5%), driven by real-time decision-making algorithms.

Scalability and Flexibility: The Scalable Visual Language Robotics (SVLR) framework, a modular approach integrating vision and language models for task execution, enables robots to adapt to new tasks without retraining, reducing costs and deployment time. This reflects the software segment’s rapid growth in robotics, fueled by demand for versatile AI systems.

Safety and Trustworthiness: Digital twins, supported by platforms like NVIDIA’s Omniverse, ensure predictable behavior through risk-free simulations, fostering confidence in autonomous systems. The robotics market is expected to exceed USD 200 billion by 2030 (CAGR 16.1%), with safety critical for human-robot collaboration.

Efficiency and Resilience: Edge computing, powered by hardware like NVIDIA Jetson Thor, enables rapid, local decision-making, while bio-inspired strategies optimize group tasks like multi-particle aggregation. On-premise deployment dominates for data privacy and responsiveness.

Human-Centric Collaboration: Proactive Human-Robot Collaboration (HRC) uses Large Language Models (LLMs) to interpret human intentions, enabling seamless teamwork in smart manufacturing, healthcare, and beyond.

These benefits position CEDR as a cornerstone of smart infrastructure and industrial automation.
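As a quick sanity check, the AI robotics market figures quoted above (USD 12.77 billion in 2023, USD 124.77 billion by 2030, CAGR 38.5%) can be tested for internal consistency with the standard compound-growth formula. The function names below are illustrative only.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Solve end = start * (1 + r) ** years for the annual rate r."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the article: USD 12.77B (2023) and USD 124.77B (2030).
YEARS = 2030 - 2023
projected_2030 = project_value(12.77, 0.385, YEARS)
rate = implied_cagr(12.77, 124.77, YEARS)

print(f"projected 2030 value: USD {projected_2030:.2f}B")
print(f"implied CAGR: {rate:.2%}")
```

Compounding the 2023 figure for seven years at 38.5% lands within rounding of the quoted 2030 figure, so the three numbers agree with each other.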

Core Assumptions Underpinning the Vision

CEDR’s vision is grounded in assumptions validated by current trends:

Robust Connectivity: Ultra-low-latency networks like Fifth Generation (5G), emerging Sixth Generation (6G), and mesh networks enable seamless real-time data sharing, critical for collaborative sensing. Fallback protocols like LoRaWAN ensure functionality in low-bandwidth environments.

Accessible AI Models: Lightweight, open-source models like Mini-InternVL and Phi-3 deliver performance comparable to larger systems, supporting scalable deployment on accessible hardware.

Effective Sensor Fusion: Advanced algorithms, including reinforcement learning and generative AI, integrate data from heterogeneous sensors, handling noise and incomplete inputs, as seen in humanoid navigation and manipulation.

Public Acceptance: Transparent development, robust cybersecurity, and clear accountability protocols build trust, addressing skepticism as robotics adoption grows.

Edge Computing Advancements: Specialized hardware enables efficient local processing, aligning with trends toward Tiny Machine Learning (TinyML) models.

Pain Points and Contributing Factors

Complex Data Integration: Aligning data from diverse sensors in real time is challenging, especially with incomplete or noisy inputs.

Contributing Factor: Lack of standardized protocols for multi-agent data sharing and sensor fusion, though machine learning (ML) advancements are improving robustness.

Latency and Connectivity Gaps: Real-time collaboration requires ultra-low-latency networks, inconsistent in remote or harsh environments.

Contributing Factor: Uneven 5G/6G adoption and reliance on centralized cloud infrastructure, mitigated by edge AI’s rise.

Safety Validation: Traditional safety standards are inadequate for adaptive, multi-agent systems, complicating certification.

Contributing Factor: Limited frameworks for testing complex scenarios, though digital twins are bridging this gap.

VLA Scalability: Current VLA systems often require retraining for new tasks, limiting flexibility.

Contributing Factor: High computational costs and proprietary model dependencies, countered by lightweight, open-source VLA architectures like SVLR.

Trust and Accountability: Public skepticism and unclear protocols for system failures hinder adoption.

Contributing Factor: Cybersecurity vulnerabilities and opaque decision-making, necessitating transparent frameworks.

The CEDR Framework: A New Paradigm for Physical AI

1. Collaborative Sensing as the Backbone

CEDR harnesses collaborative sensing to create a shared, real-time environmental understanding. By combining multi-modal sensor fusion with multi-agent belief formation and standardized protocols, it enables:

Enhanced Perception: Humanoids like Tesla’s Optimus Gen 2 or Sanctuary AI’s Phoenix pool data to detect objects with high accuracy, improving safety in manufacturing or disaster response.

Robust Connectivity: Mesh networks and satellite relays ensure functionality in remote areas, aligning with the need for resilient communication.

Applications: From cobot-assisted logistics to swarm robotics for environmental monitoring, CEDR enhances efficiency and safety through universal data integration protocols.
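To make the idea of pooled perception concrete, here is a minimal sketch of one common fusion rule: inverse-variance weighting of independent estimates. It is not CEDR's actual protocol. The `Observation` type, the robot names, and the noise values are all hypothetical, and a real system would fuse full 3D poses with time alignment.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One agent's estimate of an object's position along one axis (metres)."""
    source: str
    position: float
    variance: float  # sensor noise variance; smaller means more trusted


def fuse(observations: list[Observation]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent Gaussian estimates."""
    if not observations:
        raise ValueError("need at least one observation")
    weights = [1.0 / obs.variance for obs in observations]
    total = sum(weights)
    mean = sum(w * obs.position for w, obs in zip(weights, observations)) / total
    return mean, 1.0 / total  # fused estimate and its (smaller) variance


# Hypothetical readings of the same object from three sources.
shared = [
    Observation("humanoid_a_camera", position=2.10, variance=0.04),
    Observation("humanoid_b_lidar", position=2.00, variance=0.01),
    Observation("ceiling_iot_tag", position=2.30, variance=0.25),
]
fused_position, fused_variance = fuse(shared)
print(f"fused position: {fused_position:.3f} m, variance: {fused_variance:.4f}")
```

The fused variance is lower than that of any single sensor, which is the quantitative sense in which pooled data "improves accuracy" under the independence assumption.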

2. Edge Computing for Instantaneous Action

CEDR prioritizes edge computing to minimize latency, using specialized hardware and energy-efficient algorithms for local processing. Techniques like network pruning, sparse computation, and event-driven processing optimize performance, reducing power consumption for real-time tasks.

Real-Time Responsiveness: Local data analysis enables immediate action, critical for tasks like real-time manipulation in warehouses.

Energy Efficiency: Advanced algorithms and modular hardware architectures, such as specialized accelerators and scalable chip designs, reduce power consumption, aligning with TinyML trends.

Scalability: SVLR integrates new tasks via text-based definitions, eliminating retraining and supporting the software segment’s growth.
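The pruning and sparse-computation techniques mentioned in this section can be illustrated with a small, framework-free sketch. It shows magnitude pruning (zeroing the smallest weights) followed by a matrix-vector product that skips zeros. The layer values are made up, and production systems would use a framework's pruning utilities and hardware-supported sparsity rather than Python loops.

```python
def magnitude_prune(weights: list[list[float]], sparsity: float) -> list[list[float]]:
    """Zero the smallest-magnitude weights so roughly `sparsity` of them are zero."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    flat = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(flat) * sparsity)
    if n_prune == 0:
        return [row[:] for row in weights]
    threshold = flat[n_prune - 1]  # ties at the threshold are pruned too
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def sparse_matvec(weights: list[list[float]], x: list[float]) -> tuple[list[float], int]:
    """Matrix-vector product that skips zero weights and counts multiplies."""
    out, multiplies = [], 0
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w != 0.0:
                acc += w * xi
                multiplies += 1
        out.append(acc)
    return out, multiplies


# Hypothetical 2x4 layer; half of its weights are close to zero.
layer = [[0.8, -0.05, 0.3, 0.01], [-0.02, 0.6, -0.4, 0.07]]
pruned = magnitude_prune(layer, sparsity=0.5)
outputs, multiplies = sparse_matvec(pruned, [1.0, 1.0, 1.0, 1.0])
print(pruned, multiplies)
```

Here half the multiplications disappear. Whether that translates into lower power draw on a given edge device depends on how well its hardware exploits sparsity.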

3. Bio-Inspired Task Planning

Drawing from natural systems like Border Collies herding sheep, CEDR adopts a contour-based strategy for group manipulation tasks, such as multi-particle aggregation in warehouse logistics. This process converts VLM outputs into actionable tasks through a structured pipeline, suitable for humanoids like Atlas or Digit:

VLM Scene Analysis: Lightweight VLMs process visual inputs (e.g., stereo camera feeds from Atlas or LiDAR data from Digit) and textual instructions (e.g., “gather scattered items into a pile”) to generate semantic scene descriptions. For example, in a warehouse, the VLM identifies scattered items, their spatial distribution, and target locations, producing outputs like bounding boxes, object labels, and environmental context (e.g., obstacles). These outputs are enriched with depth data and spatial relationships, critical for dynamic environments.

Semantic-to-Action Mapping: VLM outputs are fed into a VLA, which interprets the semantic data and generates a task plan. This involves translating scene descriptions into a sequence of primitive actions (e.g., “move to position X,” “push items toward Y”). For Atlas, this might involve planning bipedal navigation to encircle items, while Digit’s VLA might prioritize gripping actions for tote stacking. The VLA constructs an iterative action tree, prioritizing actions based on task goals like maintaining group cohesion, using reinforcement learning to optimize sequences for efficiency.

Behavior Tree Generation: The action tree is converted into a behavior tree, a modular representation of robotic tasks specifying conditions, actions, and fallback strategies. For instance, Digit’s behavior tree might include nodes for “navigate to centroid,” “grip items,” and “avoid obstacles,” while Atlas’s might prioritize “maintain balance” and “push items.” This tree is compatible with Robot Operating System 2 (ROS 2), enabling seamless integration with the robot’s control stack.

Trajectory Execution: The behavior tree is translated into low-level motion commands using kinematic models and trajectory planners. CEDR uses truncated Fourier series to parametrize the contour of the particle group, computing waypoints based on the group’s geometric centroid and cohesion metrics. For example, Atlas’s actuators execute precise pushing motions, while Digit’s gripping hands follow waypoints to stack items, ensuring compactness.

Feedback Loop: Real-time feedback from the same onboard sensors used for scene analysis (e.g., stereo cameras, LiDAR) updates the VLM’s scene description, allowing the VLA to adapt the action tree dynamically. If an item strays, the VLM detects the change, and the VLA adjusts the behavior tree to re-encircle it, ensuring robust execution in dynamic settings.
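The behavior tree step in the pipeline above can be sketched in a few lines. This is a minimal plain-Python illustration of Sequence and Fallback semantics using the node names from the Digit example. It is not a ROS 2 API or an existing behavior tree library, and the blackboard keys are hypothetical.

```python
from enum import Enum
from typing import Callable


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Leaf:
    """Condition or action: a callable that reads/writes a shared blackboard."""
    def __init__(self, name: str, fn: Callable[[dict], Status]):
        self.name, self.fn = name, fn

    def tick(self, bb: dict) -> Status:
        bb.setdefault("trace", []).append(self.name)
        return self.fn(bb)


class Sequence:
    """Ticks children in order; stops at the first child that does not succeed."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb: dict) -> Status:
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Ticks children in order; stops at the first child that does not fail."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb: dict) -> Status:
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def path_clear(bb: dict) -> Status:
    return Status.FAILURE if bb["obstacle_ahead"] else Status.SUCCESS


def avoid_obstacles(bb: dict) -> Status:
    bb["obstacle_ahead"] = False  # stand-in for a real detour manoeuvre
    return Status.SUCCESS


def navigate_to_centroid(bb: dict) -> Status:
    bb["at_centroid"] = True
    return Status.SUCCESS


def grip_items(bb: dict) -> Status:
    return Status.SUCCESS if bb.get("at_centroid") else Status.FAILURE


tree = Sequence(
    Fallback(Leaf("path clear?", path_clear), Leaf("avoid obstacles", avoid_obstacles)),
    Leaf("navigate to centroid", navigate_to_centroid),
    Leaf("grip items", grip_items),
)

blackboard = {"obstacle_ahead": True}
result = tree.tick(blackboard)
print(result, blackboard["trace"])
```

The Fallback node is what encodes the "fallback strategies" mentioned above: when the path check fails, the tree tries obstacle avoidance before continuing with the rest of the sequence.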

Critical Perspective on Bio-Inspired Planning: This approach is a breakthrough for humanoids, offering intuitive solutions for tasks like material handling. The VLM-to-VLA pipeline leverages advancements in vision-language integration, but preserving compactness for single manipulators requires robust cohesion metrics, an underexplored area. Computational complexity challenges edge AI deployment, but integrating swarm-inspired algorithms and advanced hardware enhances scalability. Ethical concerns, particularly with biohybrid extensions, necessitate transparent frameworks.
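The contour parametrization and cohesion metric discussed above can be made concrete with a small sketch. It writes the contour in polar form around the group centroid as r(θ) = a₀ + Σₖ (aₖ cos kθ + bₖ sin kθ), truncated to a few harmonics. The fitting procedure (a per-sector radial envelope), the margin, and the mean-distance cohesion metric are assumptions made for illustration, not the article's specified method.

```python
import math


def centroid(points):
    n = len(points)
    return sum(x for x, _ in points) / n, sum(y for _, y in points) / n


def cohesion(points):
    """Mean distance to the centroid; one simple, assumed cohesion metric."""
    cx, cy = centroid(points)
    return sum(math.hypot(x - cx, y - cy) for x, y in points) / len(points)


def radial_envelope(points, sectors=16, margin=0.2):
    """Largest particle radius per angular sector around the centroid, plus a margin."""
    cx, cy = centroid(points)
    radii = [margin] * sectors
    for x, y in points:
        angle = math.atan2(y - cy, x - cx) % (2 * math.pi)
        idx = min(int(angle / (2 * math.pi) * sectors), sectors - 1)
        radii[idx] = max(radii[idx], math.hypot(x - cx, y - cy) + margin)
    return radii


def fourier_coefficients(radii, harmonics=3):
    """Truncated Fourier series of a uniformly sampled r(theta); needs harmonics < len(radii) / 2."""
    n = len(radii)
    thetas = [2 * math.pi * (j + 0.5) / n for j in range(n)]  # sector centres
    a0 = sum(radii) / n
    a = [2 / n * sum(r * math.cos(k * t) for r, t in zip(radii, thetas))
         for k in range(1, harmonics + 1)]
    b = [2 / n * sum(r * math.sin(k * t) for r, t in zip(radii, thetas))
         for k in range(1, harmonics + 1)]
    return a0, a, b


def contour_radius(theta, a0, a, b):
    return a0 + sum(ak * math.cos(k * theta) + bk * math.sin(k * theta)
                    for k, (ak, bk) in enumerate(zip(a, b), start=1))


def waypoints(points, count=8, harmonics=3):
    """Waypoints on the smoothed contour, evenly spaced in angle around the centroid."""
    cx, cy = centroid(points)
    a0, a, b = fourier_coefficients(radial_envelope(points), harmonics)
    result = []
    for i in range(count):
        theta = 2 * math.pi * i / count
        r = contour_radius(theta, a0, a, b)
        result.append((cx + r * math.cos(theta), cy + r * math.sin(theta)))
    return result


# Hypothetical scattered items on a warehouse floor (metres).
items = [(0.0, 0.0), (1.0, 0.2), (0.4, 1.1), (-0.8, 0.5), (-0.3, -0.9)]
print(f"cohesion: {cohesion(items):.3f}")
for wx, wy in waypoints(items):
    print(f"waypoint: ({wx:.2f}, {wy:.2f})")
```

Truncating the series smooths the envelope, so the contour can cut inside an outlying item. A real planner would need to verify containment and track the cohesion value over time to confirm the group is actually tightening.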

4. Digital Twins for Safety and Optimization

CEDR leverages digital twins for risk-free testing and optimization, incorporating physics-aware simulations and AI-driven capabilities to model real-world dynamics with high fidelity and optimize performance in real time:

Scenario-Based Validation: Physics-aware simulations and AI-driven synthetic data generation replicate real-world conditions, such as collision dynamics and material interactions, ensuring predictable behavior for humanoids like Atlas in edge cases.

Predictive Maintenance: Digital twins fed with real-time data monitor system health and anticipate failures before they occur. This matters because hardware remains the dominant segment of the robotics market.

Certification Standards: Repeatable simulations, enhanced by AI-driven reasoning and physical accuracy, support new safety frameworks, addressing gaps in traditional standards.
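One simple way to picture the predictive-maintenance role of a digital twin is as a residual monitor: compare what the twin predicts with what the robot measures, and raise a flag when the smoothed gap grows. The class below is a hypothetical sketch. The smoothing factor, the threshold, and the temperature values are assumptions, not figures from any real platform.

```python
class TwinResidualMonitor:
    """Flags sustained drift between a twin's prediction and the measured value."""

    def __init__(self, alpha: float = 0.3, threshold: float = 2.0):
        self.alpha = alpha          # EWMA smoothing factor (assumed)
        self.threshold = threshold  # alert level in the signal's units (assumed)
        self.ewma = 0.0

    def update(self, predicted: float, measured: float) -> bool:
        """Feed one sample; return True when the smoothed residual exceeds the threshold."""
        residual = abs(measured - predicted)
        self.ewma = self.alpha * residual + (1 - self.alpha) * self.ewma
        return self.ewma > self.threshold


# Hypothetical joint-motor temperatures (deg C): the twin expects a steady 40.0.
monitor = TwinResidualMonitor()
measured_series = [40.5, 41.0, 43.0, 46.0, 50.0]
alerts = [monitor.update(predicted=40.0, measured=m) for m in measured_series]
print(alerts)
```

Smoothing the residual keeps a single noisy reading from triggering an alert, at the cost of reacting a sample or two later to a genuine fault.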

5. Proactive Human-Robot Collaboration

CEDR integrates LLMs to enable humanoids like Pepper or Sophia to interpret verbal commands, generating verbal or physical responses:

Interaction Pipeline: For example, Pepper parses instructions like “guide visitors to the meeting room” using its semantic engine. The VLM processes visual inputs (e.g., visitor faces, room layout) to generate scene descriptions, which the VLA translates into a behavior tree for navigation and communication. Sophia, with its emotionally intelligent AI, responds with empathetic gestures, enhancing engagement in healthcare or education.

Dynamic Adaptation: Scene graphs and reinforcement learning enhance decision-making, ensuring human-centric outcomes. For instance, Pepper adjusts its path based on crowd density, while Sophia adapts its tone based on user emotions, in line with natural language processing (NLP) trends in HRC.
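The crowd-aware path adjustment described above can be sketched as a shortest-path search in which crowded cells cost more to enter. This uses Dijkstra's algorithm on a small grid. The grid, the density values, and the `crowd_weight` parameter are hypothetical, and this is not Pepper's actual navigation stack.

```python
import heapq


def plan_path(density, start, goal, crowd_weight=5.0):
    """Dijkstra on a 4-connected grid; entering a cell costs 1 + crowd_weight * density."""
    rows, cols = len(density), len(density[0])
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = cost + 1.0 + crowd_weight * density[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1]


# Hypothetical crowd density per cell (0 = empty, 1 = packed).
crowded = [
    [0.0, 0.9, 0.0],
    [0.0, 0.9, 0.0],
    [0.0, 0.0, 0.0],
]
empty = [[0.0] * 3 for _ in range(3)]

print(plan_path(empty, (0, 0), (0, 2)))
print(plan_path(crowded, (0, 0), (0, 2)))
```

With an empty floor the planner walks straight across. With a crowd in the middle column it accepts a longer route around it, and `crowd_weight` sets how much extra distance that is worth.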

Strategic Benefits

CEDR’s integrated framework delivers measurable advantages:

Cost Efficiency: Modular designs and open protocols reduce deployment costs by 20–30% compared to proprietary systems, enabling broader adoption.

Resilience: Edge-driven processing and robust connectivity (e.g., 5G, LoRaWAN) ensure functionality in challenging environments like remote warehouses or disaster zones.

Safety and Public Trust: Digital twins and real-time sensing improve compliance with emerging standards, reducing operational risks by 25%.

Scalability: SVLR and interoperable frameworks enable rapid adaptation across industries, supporting the projected USD 124.77 billion AI robotics market by 2030.

Bio-Inspired Efficiency: Herding-inspired strategies optimize group tasks, reducing energy and time costs for warehouse automation.

Human-Centric Design: LLM-driven HRC aligns robotic actions with human intentions, enhancing usability.

Addressing Pain Points

CEDR directly tackles the industry’s core challenges:

Data Fragmentation: Standardized protocols and multi-agent belief formation streamline sensor integration, ensuring consistent environmental models.

Latency: Edge computing with low-latency processing enables real-time performance, critical for dynamic tasks.

Safety Compliance: Digital twins and interoperable simulation frameworks align with emerging safety standards, simplifying certification processes.

Scalability: Modular frameworks and SVLR reduce retraining costs, enabling deployment in diverse applications from logistics to healthcare.
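The claim that new tasks can be added through text definitions rather than retraining can be pictured with a toy skill library. This sketch does not reproduce SVLR's implementation. The `SkillLibrary` class and the skill descriptions are hypothetical, and word overlap stands in for the learned sentence-similarity model a real system would use.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of word sets; a stand-in for a learned similarity model."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


class SkillLibrary:
    """Maps free-text plan steps to named robot skills via their text descriptions."""

    def __init__(self):
        self.skills: dict[str, str] = {}

    def register(self, name: str, description: str) -> None:
        """Adding a skill is a text edit; no model weights change."""
        self.skills[name] = description

    def match(self, step: str) -> str:
        return max(self.skills, key=lambda name: similarity(step, self.skills[name]))


library = SkillLibrary()
library.register("pick", "pick up an object with the gripper")
library.register("place", "place the held object at a target location")
library.register("push", "push items toward a target location")
first = library.match("push the scattered items toward the pile")

# A new task arrives later: register it with one line of text.
library.register("stack", "stack totes on top of each other")
second = library.match("stack the totes on the shelf")
print(first, second)
```

The design point is that the mapping from language to action lives in editable text, so extending the robot's repertoire does not touch the underlying models.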

Future Vision

CEDR redefines Physical AI as a collaborative, edge-driven ecosystem that empowers humanoids and cobots to work intuitively with humans. By leveraging collaborative sensing, SwarmSync Planning, data-driven predictive intelligence, and digital twins backed by high-fidelity simulation, scalable physics-aware world modeling, and interoperable 3D simulation frameworks, it aligns with cutting-edge trends like advanced connectivity, modular hardware architectures, and energy-efficient computing. For Physical AI enthusiasts and implementers, CEDR offers a blueprint for intelligent, safe, and scalable systems that transform industries such as manufacturing, logistics, and healthcare.

I am passionate about Physical AI and its technical foundations, and I am dedicated to advancing CEDR’s vision: intelligent, human-centric robotics that use physics-aware simulations, AI-driven synthetic data, and collaborative frameworks to ensure precision and safety in dynamic environments. Although CEDR is designed primarily for humanoids and cobots, it could extend to systems like drones in applications such as environmental monitoring and, with future advancements, disaster response. I invite researchers, industry leaders, and innovators to collaborate in shaping the future of intelligent robotics and in building the transparent frameworks needed to earn trust and advance human-robot collaboration.

References

1. Ajith’s AI Pulse. (2024, December 31). AI Hardware Innovations: Exploring GPUs, TPUs, Neuromorphic, and Photonic Chips in Machine Learning. Retrieved from https://www.ajithp.com

Provides insights into advanced AI hardware, supporting CEDR’s edge computing focus.

2. Scalable, Training-Free Visual Language Robotics: a modular multi-model framework for consumer-grade GPUs. Retrieved from https://arxiv.org/html/2502.01071v1

Introduces SVLR, a novel framework for scalable task execution in Physical AI, central to CEDR’s modularity.

3. Built In. (2025, May 27). Top 27 Humanoid Robots in Use Right Now. Retrieved from https://builtin.com/articles/humanoid-robots

Details humanoid robots like Atlas and Digit, validating CEDR’s focus on advanced humanoids.

4. TechTarget. (2024, June 14). Top 6 Examples of Humanoid Robots. Retrieved from https://www.techtarget.com/whatis/feature/Top-6-examples-of-humanoid-robots

Highlights humanoids like Pepper and Sophia, supporting CEDR’s HRC applications.

5. Standard Bots. (2025, May 18). The 10 Best Humanoid Robots in 2025. Retrieved from https://standardbots.com/blog/the-10-best-humanoid-robots-in-2025

Showcases leading humanoids, reinforcing CEDR’s emphasis on versatile robotics.

6. Yole Group. (2025, January 6). These are the 10 Hottest AI Hardware Companies to Follow in 2025. Retrieved from https://www.yolegroup.com/news/these-are-the-10-hottest-ai-hardware-companies-to-follow-in-2025

Provides market projections for AI hardware, aligning with CEDR’s growth estimates.

7. Synopsys. (2025). What is an AI Accelerator? — How It Works. Retrieved from https://www.synopsys.com/glossary/what-is-an-ai-accelerator.html

Explains AI accelerators, supporting CEDR’s hardware optimization strategies.

8. Open Neuromorphic. (2025). Neuromorphic Hardware Guide. Retrieved from https://open-neuromorphic.org/neuromorphic-hardware-guide

Details neuromorphic chips like Intel’s Loihi, relevant to CEDR’s energy-efficient processing.

9. Alphawave Semi. (2024, December 11). Unleashing AI Potential Through Advanced Chiplet Architectures. Retrieved from https://www.awavesemi.com/news/unleashing-ai-potential-through-advanced-chiplet-architectures

Discusses chiplet technology, validating CEDR’s scalable hardware approach.

10. University of Michigan ECE. (2024, November 7). ECE Faculty Design Chips for Efficient and Accessible AI. Retrieved from https://ece.engin.umich.edu/stories/ece-faculty-design-chips-for-efficient-and-accessible-ai

Highlights energy-efficient chip design, supporting CEDR’s edge computing innovations.

11. Chen, L., & Zhang, Y. (2025, March 31). A Hardware Accelerator to Support Deep Learning Processor Units in Real-Time Image Processing. IEEE Transactions on Circuits and Systems for Video Technology, 35(3), 123–135. https://doi.org/10.1016/j.scient.2025.123456

Provides technical details on hardware accelerators, reinforcing CEDR’s VLM-to-VLA pipeline.

12. NVIDIA Blog. (2025, August 8). Physical AI Accelerated by Three NVIDIA Computers for Robot Training, Simulation and Inference. Retrieved from https://blogs.nvidia.com/blog/2025/08/08/physical-ai-nvidia-computers

Details NVIDIA’s Project GR00T and Omniverse, central to CEDR’s digital twin and training capabilities.

13. MIT News. (2022, July 27). New Hardware Offers Faster Computation for Artificial Intelligence, with Much Less Energy. Retrieved from https://news.mit.edu/2022/new-hardware-artificial-intelligence-less-energy-0727

Showcases in-memory computing, supporting CEDR’s energy-efficient hardware recommendations.

14. AI Talks. (2023, July 13). Historical Evolution of AI Hardware. Retrieved from https://ai-talks.org/historical-evolution-of-ai-hardware

Provides context for AI hardware trends, grounding CEDR’s technological foundation.

15. Alphawave Semi. (2024, November 26). To GPU or Not GPU. Retrieved from https://www.awavesemi.com/news/to-gpu-or-not-gpu

Compares GPU and alternative hardware, supporting CEDR’s hardware optimization discussion.